Are exposure & vulnerability factors more important than trying to predict the weather?

At the January renewals, higher pricing baselines drove catastrophe reinsurance rate increases of 50% or more and much tighter terms, after another year in which the reinsurance industry barely earned its cost of capital. Meanwhile, the earthquakes that struck Türkiye and Syria in February underlined that natural catastrophes involve more than flood and hurricane damage.

For a sixth consecutive year, the reinsurance industry is on course to deliver poor annual results to investors already dubious of reinsurers’ ability to price catastrophe risk, including secondary perils. How has an industry that relies on sound risk assessment and management found itself in a situation where the baseline price needs to be raised so drastically? How has catastrophe-risk assessment slipped into becoming a short-term, cyclical and panic-ridden activity?

A long-term view

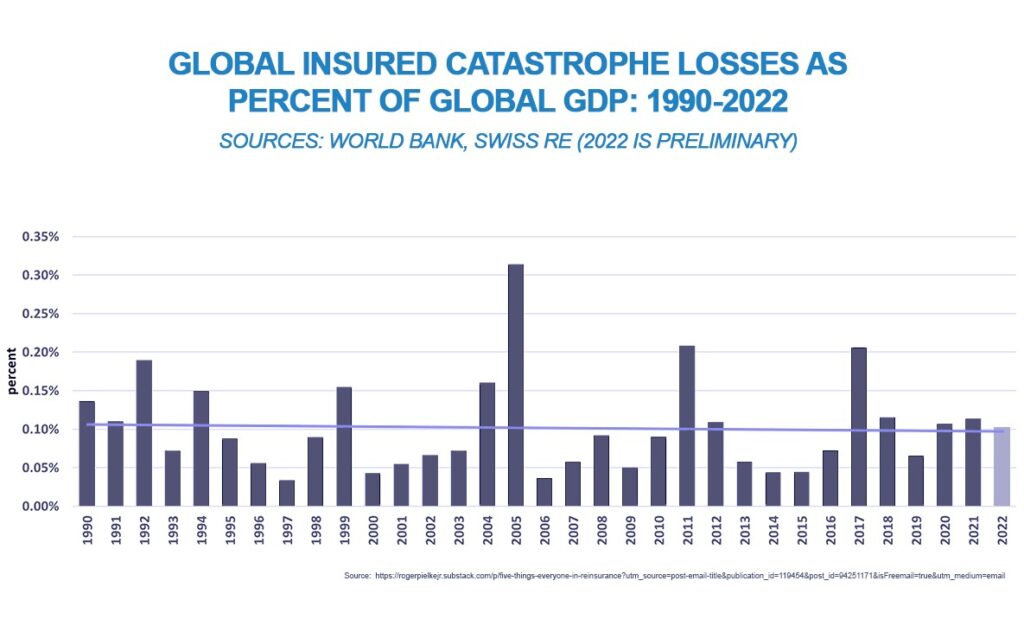

Over the long term, the reinsurance industry is adept at managing exposure and losses. Last year, increased vulnerability contributed to heavy secondary-peril losses from floods in Australia and Pakistan, hail in France and freezing temperatures during a winter storm in the US. Yet, despite “billion-dollar disasters” dominating news headlines of late, data from Swiss Re and the World Bank show that global insured catastrophe losses as a percentage of GDP have, if anything, trended downward over the last three decades (1990–2022). There is, however, a tendency to base expectations of natural weather catastrophes on shorter time periods, especially after a hiatus. The temptation to ignore the 30-year mean, or longer interdecadal influences, might explain why catastrophe reinsurance is experiencing a minor wobble as investors’ return expectations shift from the long term to the short term.

That is not to say that the Middle East and GCC countries are immune to the effects of a warming planet and damaging weather hazards. Long-term records show that average maximum temperatures in Saudi Arabia increased by 0.6°C per decade between 1978 and 2019.[1]

Oman is most at risk from landfalling cyclones forming in the Arabian Sea, as evidenced by Cyclone Gonu in 2007, which caused US$ 4 billion in damage and killed at least 50 people.[2] Gonu remains the most intense cyclone to strike Oman since storm-intensity records began in 1979. Oman’s coastline is also the most exposed of the GCC nations to storm surges and tsunamis,[3] followed by the UAE’s eastern coastline on the Gulf of Oman. Although much of the region experiences water stress, cyclones that do not make landfall but track up the Arabian Sea and through the Gulf of Oman can often bring intense precipitation and damaging floods. In terms of mortality, no natural hazard has matched Oman’s 1890 tropical cyclone, when some 727 people were reported to have died in flooding, a death toll around 14 times higher than Gonu’s.[4] Yet more human and economic assets are exposed today than ever before.

Elsewhere in the region, the UAE population has grown from around 70,000 in 1950 to more than 10 million residents in 2022.[5] In terms of exposure, massive real-estate development and aggressive urban sprawl, together with increasing desertification, would suggest a rise in vulnerability to natural hazards. The total market value of property in Dubai alone is estimated to have reached US$ 530 billion in 2020. S&P Global nevertheless believes that GCC insurers are exposed to relatively few environmental risks: “Physical risks from natural catastrophes are relatively low in the region”, it says, “and large risks are typically ceded to international reinsurers”.[6]

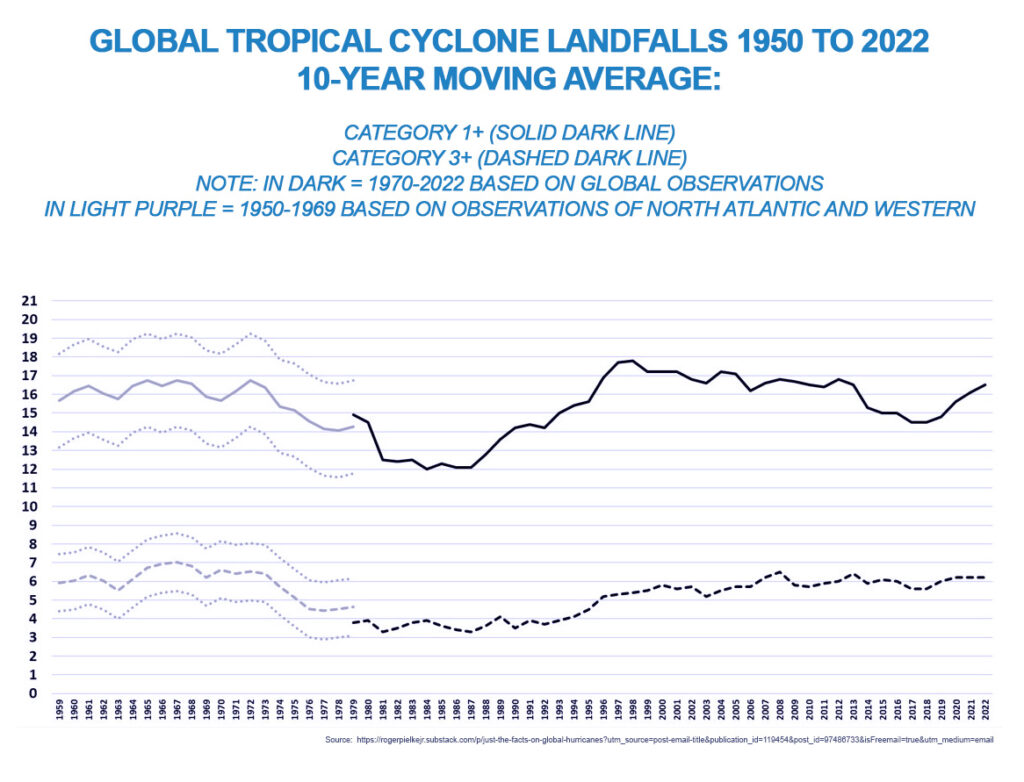

North American storms, of which US hurricanes form the overwhelming majority, account for around 60% of global insured losses. It is perhaps not surprising, then, that panic over recent losses from Hurricane Ian feeds into the general uncertainty about pricing catastrophe risk against a backdrop of declining investor confidence. Yet 2022, with 18 landfalling hurricanes and five landfalling major hurricanes worldwide, was close to the median for the last 50 years (16 and five, respectively). “So, it’s clearly going up,” said Swiss Re’s CEO at the recent World Economic Forum meeting in Davos, “this clear impact of climate change and there’s a certain uncertainty right now about the pricing of that”. Swiss Re also noted that Hurricane Ian was “the second-costliest insured loss ever” after Hurricane Katrina in 2005. However, the historical dataset starting in 1900, normalised to 2022 values, shows that the costliest and second-costliest hurricanes on record struck in 1926 and 1900, respectively. In other words, the historical record tells us that many past hurricanes would cause significantly more damage were they to occur today. We need only understand natural decadal and interdecadal variability through historical records to conclude that much more damaging storms are possible, with or without the impact of climate change.

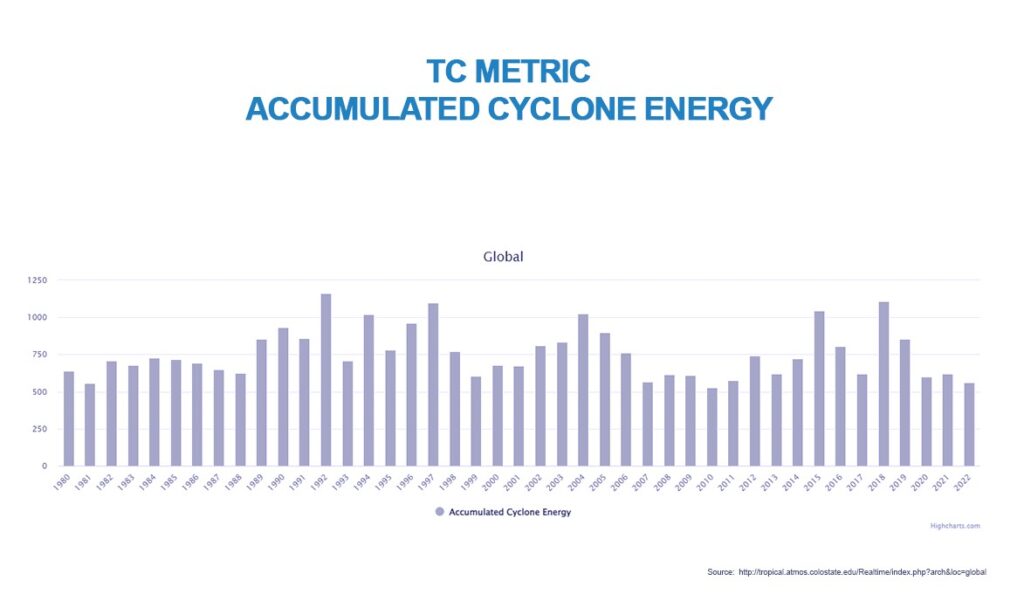

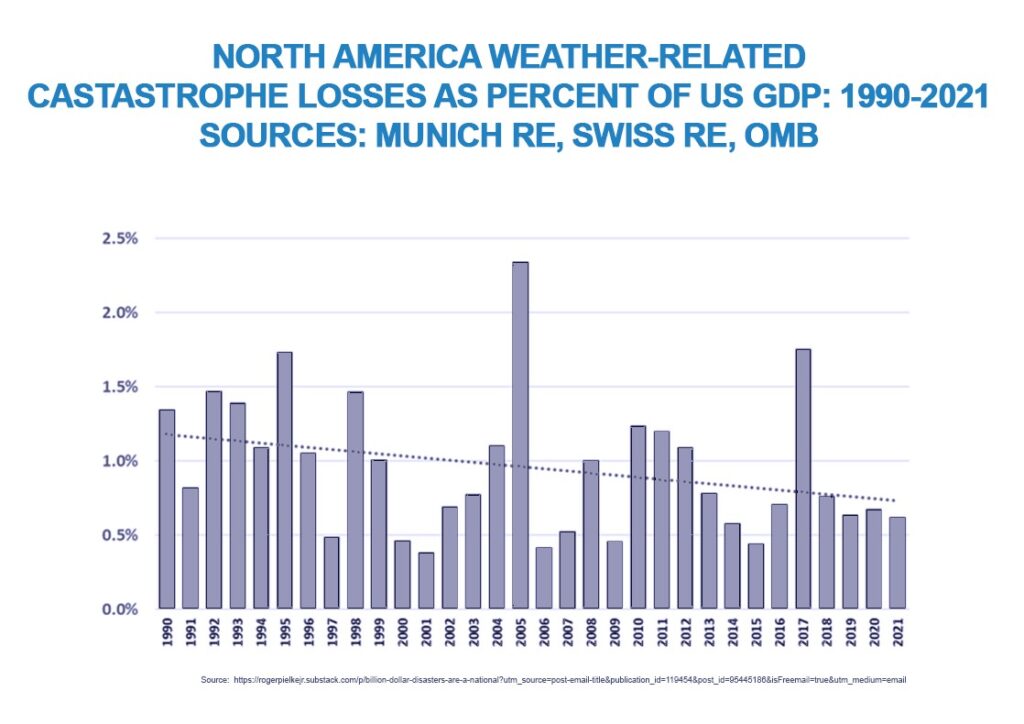

As discussed below, loss data shows that North American weather-related disaster losses as a percentage of US GDP have in fact dropped by almost half since 1990. Overall, the total number of hurricanes worldwide in 2022 (including those making landfall) was 86, against a 40-year median of 87, while the total number of major hurricanes was 17, against a median of 24. Crucially, Accumulated Cyclone Energy (ACE), a metric that combines cyclone frequency, intensity and duration, shows no global trend over the last four decades (1980–2022), according to data from Colorado State University’s Department of Atmospheric Science.
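
To make the ACE metric concrete, the sketch below applies its standard definition: 10⁻⁴ times the sum of the squares of a storm’s maximum sustained wind speed in knots, sampled every six hours while the system is at or above tropical-storm strength. The threshold is assumed here to be 34 knots, and both storm tracks are hypothetical; real calculations draw on best-track datasets, which are not reproduced here.

```python
"""Accumulated Cyclone Energy (ACE) from 6-hourly maximum sustained winds."""

# Assumed threshold: only 6-hourly fixes at tropical-storm strength or above count.
TROPICAL_STORM_KT = 34


def storm_ace(six_hourly_winds_kt):
    """ACE for one storm: 1e-4 x sum of squared 6-hourly max winds (knots)."""
    return 1e-4 * sum(v * v for v in six_hourly_winds_kt if v >= TROPICAL_STORM_KT)


def total_ace(storms):
    """Season or annual ACE: the sum of ACE over every storm in the period."""
    return sum(storm_ace(winds) for winds in storms.values())


# Hypothetical tracks: one short, intense storm and one long-lived, weak storm.
storms = {
    "short_intense": [30, 40, 60, 100, 120, 100, 60, 30],
    "long_weak": [40] * 20,
}

for name, winds in storms.items():
    print(f"{name}: ACE = {storm_ace(winds):.2f}")
print(f"total: ACE = {total_ace(storms):.2f}")
```

This prints 4.32 for the intense storm, 3.20 for the weak one and 7.52 in total. Squaring the winds rewards intensity, while summing over every six-hourly fix and every storm rewards duration and frequency, which is why a single ACE series can show whether overall cyclone activity is trending.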

What does this mean for the insurance and reinsurance industry? It is probable that the panic is driven by more than one factor, but is the industry spending too much energy trying to guess future weather hazards and not enough on understanding exposure and the changing nature of vulnerability?

Before we examine that question in greater detail, we first need to address one important factor in assessing relative risk. Insurers, reinsurers and society at large need a reliable mechanism for estimating the extent of present and future risk relative to historical data. One of the most reliable ways to avoid confusing economic loss trends with weather-hazard trends is “normalisation”. As discussed below, adjusting for inflation alone is inadequate, which is why GDP is used as part of the mechanism to normalise loss data.

Normalising loss data for exposure

Why is it important to normalise loss data? Put simply, normalisation allows insurers and reinsurers to estimate, for example, the potential loss from historical hurricanes if they were to make landfall today, and therefore to make more accurate estimates of future loss risk by applying historical data to present-day conditions. To adjust historical hazard impacts to 2022 values, normalisation assumes that catastrophe losses are proportional to wealth, population, social factors and inflation.
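
The proportionality assumption can be sketched in a few lines. This is a minimal sketch assuming one widely used multiplicative form, in which a historical loss is scaled by the change in price level, real wealth per capita and affected population between the event year and the target year. The `Conditions` fields and every figure below are hypothetical placeholders, not the inputs of any published dataset.

```python
from dataclasses import dataclass


@dataclass
class Conditions:
    """Hypothetical socioeconomic snapshot of the affected area in one year."""
    price_index: float             # general price level (e.g. a CPI-style index)
    real_wealth_per_capita: float  # inflation-adjusted wealth per person
    population: float              # people living in the affected area


def inflation_only(loss, event, target):
    """Adjust a historical loss for changes in the price level alone."""
    return loss * target.price_index / event.price_index


def normalise(loss, event, target):
    """Scale a historical loss to target-year conditions.

    Assumes loss is proportional to price level x real wealth per capita x population.
    """
    wealth_factor = target.real_wealth_per_capita / event.real_wealth_per_capita
    population_factor = target.population / event.population
    return inflation_only(loss, event, target) * wealth_factor * population_factor


# Hypothetical figures for a 1926 event re-expressed in 2022 conditions.
event_1926 = Conditions(price_index=10.0, real_wealth_per_capita=5.0, population=1.0)
today_2022 = Conditions(price_index=200.0, real_wealth_per_capita=40.0, population=10.0)

loss_1926 = 100.0  # US$ million at the time (hypothetical)
print(f"Inflation only: {inflation_only(loss_1926, event_1926, today_2022):,.0f}")
print(f"Normalised:     {normalise(loss_1926, event_1926, today_2022):,.0f}")
```

With these made-up inputs the inflation-only figure is 2,000 while the normalised figure is 160,000, so adjusting for prices alone understates the present-day loss eightyfold. Expressing losses as a share of GDP captures a broadly similar combined effect of wealth and population growth in a single step.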

Loss data from Munich Re’s NatCatSERVICE and Swiss Re’s sigma databases, adjusted for GDP, exposure, inflation and socioeconomic trends, shows that North American weather-related catastrophe losses as a percentage of US GDP have almost halved over the last 30 years, while global insured catastrophe losses as a proportion of global GDP continue to show no upward trend over that period. This data, supported by a modern scientific understanding of trends in vulnerability and set against a backdrop of a growing economy and larger loss potential, would seem to be at odds with NOAA’s “billion-dollar disaster” count, but for one flaw: NOAA’s count does not adjust historical events for changes in exposure. As NOAA itself states, “it is difficult to attribute any part of the trends in losses to climate variations or change, especially in the case of billion-dollar disasters . . . The magnitude of such increasing trends [in billion-dollar disasters] is greatly diminished when applied to data normalised for exposure”.
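
The difference between raw and GDP-relative trends can be illustrated with a short sketch. The series below are invented for illustration only: nominal losses rise, but GDP rises faster, so an ordinary least-squares trend fitted to losses as a share of GDP points down even though the trend in raw losses points up.

```python
def share_of_gdp(losses, gdp):
    """Losses expressed as a percentage of GDP, year by year."""
    return [100.0 * loss / output for loss, output in zip(losses, gdp)]


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


# Hypothetical five-year series in arbitrary currency units.
years = [2018, 2019, 2020, 2021, 2022]
losses = [10.0, 12.0, 15.0, 14.0, 18.0]
gdp = [1000.0, 1250.0, 1600.0, 1800.0, 2300.0]

print(f"Trend in raw losses:         {ols_slope(years, losses):+.3f} per year")
print(f"Trend in losses as % of GDP: {ols_slope(years, share_of_gdp(losses, gdp)):+.3f} points per year")
```

Five points are far too few to establish a real trend; the example only shows how the same loss record can slope in opposite directions depending on whether it is scaled by the size of the economy.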

If data is not adjusted for exposure and other socioeconomic developments, economic loss trends can be mistaken for weather hazard trends, leading to inaccurate assumptions.

How might this affect the insurance and reinsurance industry? In short, it can lead to gross underestimates of exposure. As Swiss Re noted in January, “[the US major-hurricane drought] led to industry underestimation of ever-growing property exposures due to a rise in values at risk in exposed locations, and continued urban sprawl and economic growth”. In other words, an absence of economic losses during the drought blinded some to ever-increasing exposure from changes in wealth, population and society. When natural variability saw hazards return closer to the long-term median after the hiatus, many experienced an avoidable shock.

There is no detectable trend – understanding US major-hurricane risk

As mentioned above, North American storms make up the majority of global insured losses, and yet properly adjusted losses for the US from Atlantic hurricanes over the last 120-plus years (1900–2021) show no trend in either direction.

From 2017 onwards, insured losses in five of the last six years have come in slightly above the long-term median. Before that, however, the continental US went 11 years without a major hurricane landfall. Looking further back at the top-10 costliest hurricanes since 1900, normalised to 2022 societal conditions, only one hurricane from this century (Katrina in 2005) has topped the US$ 100 billion mark, and nothing has come close to the US$ 260 billion loss likely to be suffered if the Great Miami hurricane of 1926 were to strike today. In 2022 values, it remains by far the costliest hurricane on record.

In the presence of such huge natural variability, the reinsurance industry should be wary of seeking out trends where none exists. Because tropical cyclones are characterised by a high degree of variability, scientists suggest that, even if climate models are accurate, any anthropogenic signal would be impossible to detect for another century or more.
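
Why variability delays detection can be made concrete with a back-of-the-envelope calculation. The sketch assumes, purely for illustration, that annual losses equal a steady linear trend plus independent, normally distributed year-to-year noise with a known standard deviation, and asks how many years of data an ordinary least-squares fit needs before the trend reaches about two standard errors. Real loss series are skewed and autocorrelated, which typically makes detection harder still, so treat the result as optimistic.

```python
import math


def slope_standard_error(sigma, n_years):
    """Standard error of an OLS trend fitted to n equally spaced annual values.

    For years 1..n, the sum of squared deviations from the mean year is n(n^2 - 1)/12.
    """
    sxx = n_years * (n_years ** 2 - 1) / 12
    return sigma / math.sqrt(sxx)


def years_to_detect(trend_per_year, sigma, z=2.0, max_years=10_000):
    """Smallest record length at which |trend| / standard error reaches z.

    Assumes independent Gaussian noise with known standard deviation sigma.
    Returns None if the trend is not detectable within max_years.
    """
    for n in range(3, max_years + 1):
        if abs(trend_per_year) / slope_standard_error(sigma, n) >= z:
            return n
    return None


# Hypothetical: a trend of 0.5% of mean annual loss per year, buried in
# year-to-year variability with a standard deviation equal to the mean loss.
print(years_to_detect(trend_per_year=0.005, sigma=1.0))
```

With these made-up inputs the answer is 125 years; halving the noise (`sigma=0.5`) brings it down to 79. The numbers carry no weight of their own, but they show how a small signal relative to large natural variability translates into very long detection times.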

Indeed, as the IPCC points out, “there is low confidence in most reported long-term (multidecadal to centennial) trends in tropical cyclone frequency- or intensity-based metrics”. In short, concentrating on exposure and on reducing vulnerability, work that is already underway (see below), will help insurers better predict hazard impacts and economic losses in the future by minimising the uncertainty and ignorance inherent in trend detection. As the University of Colorado’s Professor Roger Pielke Jr. notes, “the impact of hurricanes on society is overwhelmingly determined by how we build, where we build, what we build, what we place inside, and how we warn, shelter, evacuate, recover … That is in fact good news, because it tells us that the decisions we make (and don’t make) will determine the hurricane disasters of the future”. Not only is this true of all weather hazards but, more to the point, those factors will determine the insured losses of the future.

Vulnerability – flawed estimates and out-of-date risk assessments

Three main elements combine to create disaster risk: hazard, exposure and vulnerability. Hazards comprise meteorological or geological events that can endanger property or life; exposure refers to the placing of economic assets or people in hazard-prone areas; and vulnerability describes the extent to which those human and economic assets are susceptible to the effects of hazards. Scientists define vulnerability as “the predisposition to incur loss, hence it is the component that has the potential to transform a natural hazard into a disaster”.
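
One simple way to see how the three elements combine is an expected-annual-loss calculation over a set of events, a structure loosely resembling a catastrophe model: each event’s annual rate stands in for the hazard, the value of assets in its footprint for exposure, and a damage ratio for vulnerability. The event set, the `Event` fields and the `with_adaptation` helper below are hypothetical and far simpler than any commercial model.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Event:
    """One hypothetical loss scenario."""
    annual_rate: float    # hazard: expected occurrences per year
    exposed_value: float  # exposure: value of assets in the event footprint
    damage_ratio: float   # vulnerability: fraction of exposed value lost (0 to 1)


def expected_annual_loss(events):
    """Sum of rate x exposure x vulnerability across the event set."""
    return sum(e.annual_rate * e.exposed_value * e.damage_ratio for e in events)


def with_adaptation(events, vulnerability_factor):
    """Scale every damage ratio, e.g. 0.5 for measures that halve vulnerability."""
    return [replace(e, damage_ratio=e.damage_ratio * vulnerability_factor) for e in events]


events = [
    Event(annual_rate=0.10, exposed_value=1_000.0, damage_ratio=0.2),  # frequent, moderate
    Event(annual_rate=0.02, exposed_value=1_000.0, damage_ratio=0.5),  # rare, severe
]

print(f"Baseline EAL:         {expected_annual_loss(events):.1f}")
print(f"After halving damage: {expected_annual_loss(with_adaptation(events, 0.5)):.1f}")
```

Because the three terms multiply, halving every damage ratio in this toy setup exactly offsets a doubling of every event rate, which illustrates why measurable reductions in vulnerability can matter as much as uncertain changes in hazard frequency.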

Disasters strike when a hazard affects vulnerable economic assets or populations. Until recently, it was poorly appreciated that vulnerability varies enormously: it is dynamic in both time and space, partly because it is influenced by adaptation efforts to minimise hazard impacts and by actions that reduce (or increase) exposure. It also depends on fast-changing economic and social realities and is, of course, hazard-specific.

Scientists have made significant inroads into understanding the variability of vulnerability, and the results come as a surprise to some. Recent findings show that socioeconomic vulnerability, expressed as mortality over exposed population and economic loss over exposed GDP, fell over the study period (1980–2016). The global mortality rate dropped six-fold, while the economic loss rate (the ratio between economic loss suffered and exposed GDP) fell five-fold.
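
Both vulnerability measures are simple ratios, and a fold reduction compares a ratio at the start of a period with the same ratio at the end. The figures below are invented, not the study’s data; they show why rates rather than raw totals are the right comparison, since deaths fall only three-fold while the exposed population doubles, so the mortality rate falls six-fold.

```python
def mortality_rate(deaths, exposed_population):
    """Deaths per person exposed to the hazard."""
    return deaths / exposed_population


def loss_rate(economic_loss, exposed_gdp):
    """Economic loss as a fraction of the GDP exposed to the hazard."""
    return economic_loss / exposed_gdp


def fold_reduction(earlier_rate, later_rate):
    """How many times smaller the later rate is than the earlier one."""
    return earlier_rate / later_rate


# Hypothetical early- and late-period figures for the same hazard.
early_mortality = mortality_rate(deaths=600, exposed_population=1_000_000)
late_mortality = mortality_rate(deaths=200, exposed_population=2_000_000)
early_loss = loss_rate(economic_loss=50.0, exposed_gdp=1_000.0)
late_loss = loss_rate(economic_loss=30.0, exposed_gdp=3_000.0)

print(f"Mortality rate: {fold_reduction(early_mortality, late_mortality):.1f}-fold drop")
print(f"Loss rate:      {fold_reduction(early_loss, late_loss):.1f}-fold drop")
```

In the loss example, absolute losses fall by only 40% while exposed GDP triples, so the loss rate drops five-fold: falling rates can coexist with large absolute losses when exposure is growing.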

In many ways, this should not come as a surprise, as enhanced adaptation and protection measures have more than outweighed increased exposure to disaster risk. The same scientists also demonstrate a clear negative relationship between vulnerability and wealth. “The reduction in mortality and loss rates with increasing wealth”, they report, “is evident both for the multi-hazard analysis as well as for the single hazards”. In other words, as wealth increases, vulnerability diminishes, despite increasing exposure of ever more valuable human and economic assets to single and multiple hazards. After all, wealthier developed nations are more likely to have invested in multi-natural-hazard protection and tend to have more effective early-warning systems and efficient post-disaster recovery plans.

What might this mean for the reinsurance industry? In the past, the industry has placed too much emphasis on the hazard element of disaster risk and catastrophe losses and too little on vulnerability and exposure. In other words, it has expended too much effort trying to guess the frequency and severity of future natural weather hazards and to predict the impacts of climate change on those hazards. Munich Re’s chief climate scientist recently told reporters, “we can’t directly attribute any single severe weather event to climate change”. Yet even if we could, that would not change the fact that the influence of hazard frequency and intensity diminishes greatly as vulnerability is reduced, and becomes immaterial once exposure and vulnerability are accounted for, or offset, through adaptation.

Vulnerability is a crucial tool in assessing potential loss risk, simply because vulnerability metrics are available and discoverable. The more effort and funding that governments, insurers and reinsurers put into measuring vulnerability, the greater their understanding and the lower their risk exposure. Conversely, attempts to predict future hazards are limited by our inability to predict the future with any meaningful accuracy.

Cutting through the noise

The catastrophic earthquake that struck Türkiye and Syria in February this year underlines that not all natural disasters are weather-related. No amount of climate-crisis number crunching can predict the incidence and severity of earthquakes. Given the human cost of the disaster in terms of lives, homes and livelihoods, it is not surprising that the tribulations of the region’s insurance and reinsurance markets have not made the headlines.

Most of the insured losses arising from the earthquake (estimated at US$ 1 billion) will be covered by international reinsurance markets, but the amount will be insignificant in a global context, and the implications for reinsurers’ ratings globally are expected to be minimal. Within the region itself, however, the asset and underwriting risks of many reinsurers are expected to increase, weighing on their risk-adjusted capitalisation. The relatively low insurance coverage in the affected region is a significant factor in these calculations and should also be taken as a wake-up call: had all the damaged properties been insured in line with Türkiye’s nominally mandatory earthquake insurance cover, the story would have been very different.

Credit rating agency AM Best underlines that Turkish reinsurers will continue to struggle – the earthquake occurred at a challenging time in Türkiye, which has seen significant inflation and a weakened currency in recent years (not to mention a major earthquake in 2020), while Syria is locked in civil war.

Away from the random, catastrophic blow of earthquakes, the emerging trends in mortality and economic loss from natural catastrophes should be cause for cautious optimism: 2021 saw the lowest weather-disaster mortality since reliable records began, at a ratio of approximately 1:1,300,000. Considering the vast natural variability of weather hazards, and the accompanying likelihood of historical hazards recurring in the future, the best chance the reinsurance industry and wider society have of keeping those trends moving downward is to renew their focus on exposure and vulnerability.

The bottom line for reinsurers is this: even the fast pace of climate-change-model predictions for hazard losses cannot keep up with socioeconomic change. Therefore, to avoid flawed and out-of-date risk assessments, it is vital that the industry seeks out and uses accurate and current data on vulnerability and exposure, data that will be inherently immune to uncertainty and fundamental ignorance.

If reinsurance companies can stay in business through the periods in which the natural world disproves, invalidates or discredits human predictions, the reinsurance industry will have the opportunity to thrive and to support insureds and the wider world in understanding long-term hazard variability.

[1] https://www.hindawi.com/journals/amete/2020/8828421

[2] https://www.researchgate.net/publication/301363009_Comparison_between_the_2007_Cyclone_Gonu_Storm_Surge_and_the_2004_Indian_Ocean_Tsunami_in_Oman_and_Hormozgan_Iran

[3] https://climateknowledgeportal.worldbank.org/country/united-arab-emirates/vulnerability

[4] https://climateknowledgeportal.worldbank.org/country/united-arab-emirates/vulnerability

[5] https://climateknowledgeportal.worldbank.org/country/united-arab-emirates/vulnerability

[6] https://climateknowledgeportal.worldbank.org/country/united-arab-emirates/vulnerability